This AI Taught Itself to Play Go and Beat the Reigning AI Champion

Credit to Author: Daniel Oberhaus | Date: Wed, 18 Oct 2017 17:00:00 +0000

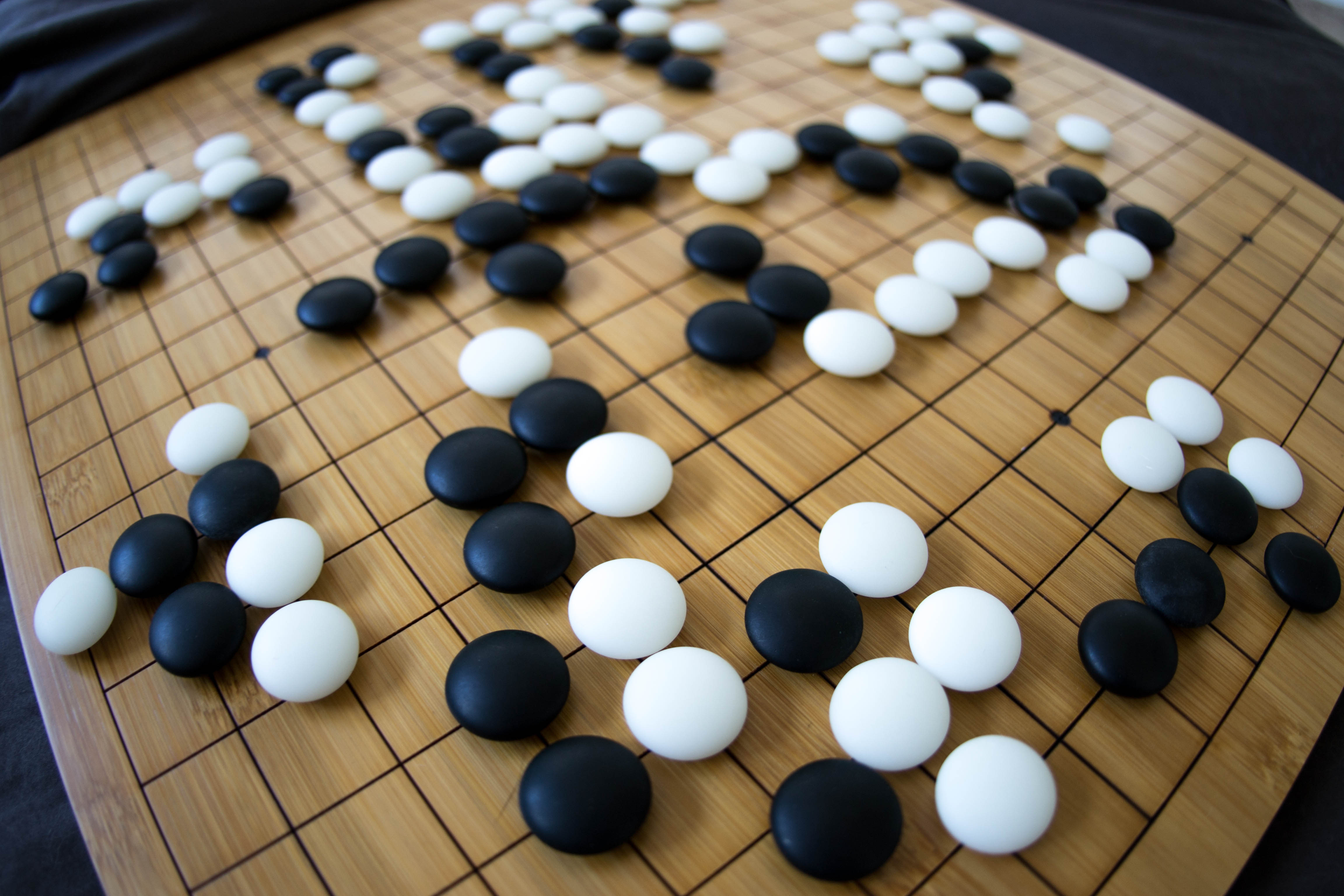

In early 2016, an artificial intelligence called AlphaGo shocked the world when it beat Lee Sedol, one of the world’s best Go players, in a series of Go games. Go is an ancient strategy game that originated in China, in which two players take turns placing stones on a grid, trying to capture one another’s stones and control territory on the board. In terms of difficulty and strategy, it’s kind of like chess on steroids. Although IBM’s Deep Blue beat world chess champion Garry Kasparov two decades ago, experts thought it would be at least another decade before a computer could take on a Go champion, given the complexity and intuitive strategy required to master the game. AlphaGo’s victory over Lee Sedol marked a major step forward for artificial intelligence, but its creators weren’t finished.

As detailed today in Nature, DeepMind, the secretive Alphabet (née Google) subsidiary responsible for AlphaGo, has created an artificial intelligence that taught itself how to play Go without learning from any human games. This new self-taught AI absolutely decimated last year’s algorithmic champion, AlphaGo, 100 games to 0.

Like its predecessor, this self-taught Go AI, known as AlphaGo Zero, is a neural network, a type of computing architecture loosely modeled on the human brain. The original AlphaGo, however, got a head start from human experts before refining its Go strategy through an iterative process.

According to a DeepMind paper published in Nature last year, AlphaGo was actually the product of two neural nets: a “value network” that evaluated board positions by estimating which player was likely to win, and a “policy network” that selected its next move. These were trained by studying millions of moves from expert human games and by playing thousands of games against itself, fine-tuning AlphaGo’s strategy over the course of several months.

The new and improved AlphaGo Zero, on the other hand, consists of only a single neural net, which started out knowing nothing but the rules of Go. Everything else it learned about the game was self-taught. Rather than studying expert human moves, AlphaGo Zero only played games against itself. It started out placing stones at random and, over the course of 4.9 million games, came to ‘understand’ the game so well that it beat AlphaGo in 100 straight games.
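
To make the idea of learning purely from self-play a little more concrete, here is a minimal sketch in Python. It is not DeepMind’s method: AlphaGo Zero paired a deep neural network with a tree search, while this sketch swaps in a simple lookup table and a toy game in which players take turns removing one to three stones from a pile, and whoever takes the last stone wins. Like AlphaGo Zero, it is told only the rules (which moves are legal), starts with no strategy at all, and improves only from the outcomes of games against itself. All names and settings here (`train_by_self_play`, `greedy_move`, `MAX_PILE`, the exploration and learning rates) are hypothetical choices for illustration.

```python
import random

# Hypothetical toy game: players alternately remove 1, 2 or 3 stones from a
# pile, and whoever takes the last stone wins. MAX_PILE is an arbitrary choice.
MAX_PILE = 10
MOVES = (1, 2, 3)


def legal_moves(pile):
    """The rules are the only built-in knowledge: which moves are allowed."""
    return [k for k in MOVES if k <= pile]


def greedy_move(values, pile):
    """Pick the move that leaves the opponent in the worst-looking position.

    values[p] estimates the chance that the player to move with p stones
    left goes on to win.
    """
    return max(legal_moves(pile), key=lambda k: 1.0 - values[pile - k])


def train_by_self_play(games=5000, epsilon=0.1, alpha=0.1, seed=0):
    """Learn a value table purely from the outcomes of games against itself."""
    rng = random.Random(seed)
    # Start with no strategy: every position looks like a coin flip, except an
    # empty pile, where the player to move has already lost.
    values = [0.5] * (MAX_PILE + 1)
    values[0] = 0.0
    for _ in range(games):
        pile = rng.randint(1, MAX_PILE)
        visited = []  # (pile size, player to move) for each position reached
        player = 0
        while pile > 0:
            visited.append((pile, player))
            if rng.random() < epsilon:
                move = rng.choice(legal_moves(pile))  # explore a random move
            else:
                move = greedy_move(values, pile)  # play the best move so far
            pile -= move
            player = 1 - player
        winner = 1 - player  # the player who took the last stone
        # Nudge each visited position toward 1 if the player to move there
        # won the game, and toward 0 if they lost.
        for position, mover in visited:
            target = 1.0 if mover == winner else 0.0
            values[position] += alpha * (target - values[position])
    return values


values = train_by_self_play()
for pile in range(1, 8):
    print(f"{pile} stones left: take {greedy_move(values, pile)}")
```

After a few thousand games, the table should lead the player to grab the last stones when it can and otherwise to leave its opponent a multiple of four stones, the known winning strategy for this game, even though that strategy was never programmed in. The fixed seed simply makes runs reproducible.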

This is undoubtedly an impressive feat, but AlphaGo Zero is still a far cry from the general AI that haunts science fiction as the specter of human obsolescence. For all its prowess at Go, DeepMind’s new neural net can’t make you a cup of tea or discuss the day’s weather, but it’s a portent of things to come.


Earlier this year, DeepMind researchers published two papers on arXiv describing AI architectures that they hoped would pave the way toward general AI. The first paper detailed a neural network module, called a relation network, that could reason about the relationships between objects in a static scene of 3D shapes, such as spheres and cubes, and was tested on a visual question-answering dataset called CLEVR. The second paper described a neural net that could predict the future states of objects moving in a 2D scene based on their past motion. Both neural nets outperformed other state-of-the-art models, and the relation network even outperformed humans on some tasks.
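
The relation network’s core idea fits in a few lines: the same function g is applied to every pair of objects, the results are summed, and a second function f turns that sum into an answer, roughly RN(O) = f(Σ g(o_i, o_j)) over all pairs i, j. In the paper, g and f are small learned neural networks, and g is also fed an encoding of the question being asked. In the Python sketch below they are hypothetical hand-written stand-ins (`g_left_of`, `f_fraction_same_color`), and each object is just an x position and a color, so treat it as an illustration of the structure rather than DeepMind’s implementation.

```python
from itertools import product


def relation_network(objects, g, f):
    """Apply g to every ordered pair of objects, sum the results, then apply f."""
    pair_outputs = [g(o_i, o_j) for o_i, o_j in product(objects, repeat=2)]
    # Element-wise sum over all pairs, so the order of the objects doesn't matter.
    summed = [sum(column) for column in zip(*pair_outputs)]
    return f(summed)


# Hypothetical stand-ins for the learned networks g and f.
# Each object is [x_position, color_id].
def g_left_of(a, b):
    """Pair features: is a left of b, and is it also the same color as b?"""
    left = 1.0 if a[0] < b[0] else 0.0
    same_color = 1.0 if a[1] == b[1] else 0.0
    return [left, left * same_color]


def f_fraction_same_color(summed):
    """Fraction of left-of relations that link two objects of the same color."""
    total_left, same_color_left = summed
    return same_color_left / total_left if total_left else 0.0


RED, BLUE = 0, 1
scene = [[0.0, RED], [1.0, BLUE], [2.0, RED]]
print(relation_network(scene, g_left_of, f_fraction_same_color))  # prints 0.3333333333333333
```

Because the pair outputs are summed, shuffling the order of the objects does not change the answer, which suits a scene where objects have no natural order.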

DeepMind said neither of these neural network architectures was used to develop AlphaGo Zero, although the company expects the approach behind AlphaGo Zero to have applications far beyond board games.

“AlphaGo Zero shows superhuman proficiency in one domain, and demonstrates the ability to learn without human data and with less computing power,” a DeepMind spokesperson told me in an email. “We believe this approach may be generalisable to a wide set of structured problems that share similar properties to a game like Go, such as planning tasks or problems where a series of actions have to be taken in the correct sequence like protein folding or reducing energy consumption.”

The AI research at DeepMind has a clear trajectory: teaching machines how to ‘think’ more like a human. Cracking this problem will be key to developing general AI, and DeepMind’s work so far amounts to baby steps in that direction. It’s tempting to hype up a self-taught algorithmic Go champion as a harbinger of the impending AI apocalypse, but to paraphrase Harvard computational neuroscientist Sam Gershman, a computer’s superhuman ability in one specific task is not the same thing as superhuman intelligence.

So until this superhuman computer intelligence arrives, enjoy getting your ass kicked at Go by a computer while you still can.

Get six of our favorite Motherboard stories every day by signing up for our newsletter.