Study Finds That Banning Trolls Works, to Some Degree

Credit to Author: Claire Downs | Date: Wed, 13 Sep 2017 15:39:51 +0000

On October 5, 2015, facing mounting criticism about the hate groups proliferating on Reddit, the site banned a slew of offensive subreddits, including r/CoonTown and r/fatpeoplehate, which targeted Black people and overweight people, respectively.

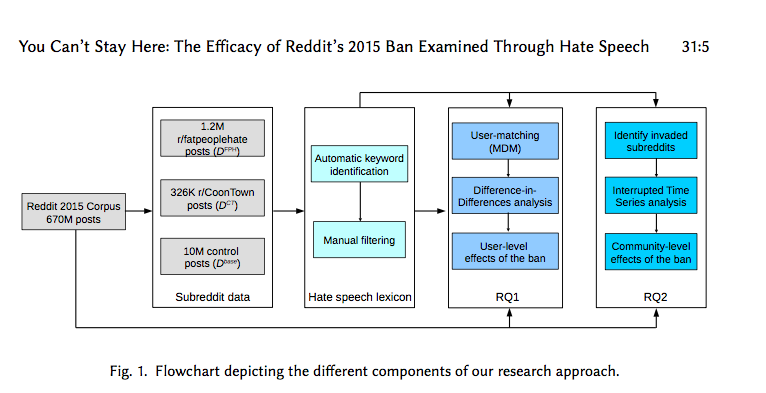

But did banning these online groups from Reddit diminish hateful behavior overall, or did the hate just spread to other places? A new study from the Georgia Institute of Technology, Emory University, and University of Michigan examines just that, using data collected from 100 million Reddit posts that were created before and after the aforementioned subreddits were dissolved. In a paper published in the journal ACM Transactions on Computer-Human Interaction, the researchers conclude that the 2015 ban worked. More accounts than expected stopped using the site, and accounts that stayed after the ban drastically reduced their hate speech. However, studies like this raise questions about the systemic issues facing the internet at large, and how our culture should deal with online hate speech.

ACM Transactions on Computer-Human Interaction, Vol. 1, No. 2

First, the researchers automatically extracted words from the banned subreddits to create a dataset that included hate speech and community-specific lingo. In r/CoonTown, this included racial slurs and “terms that frequently play a role in racist argumentation (e.g., ‘negro’, ‘IQ’, ‘hispanics’, ‘apes’),” according to the paper. In r/fatpeoplehate, top terms included slurs (such as ‘fatties’ and ‘hams’), words that contributed to fat shaming (e.g., ‘BMI’, ‘cellulite’), and “a cluster of terms that relate, self-referentially, to the practice of posting hateful content (e.g., ‘shitlording’, ‘shitlady’).”
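To make the term-extraction step described above more concrete, here is a minimal sketch in Python. It is not the paper's method, which the article does not detail. It swaps in a simple smoothed log-ratio of word frequencies between a target community and a baseline. The function names, the smoothing value, and the toy posts are all assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and keep runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def distinctive_terms(target_posts, baseline_posts, top_n=3, smoothing=1.0):
    """Rank words by how much more often they appear in target_posts
    than in baseline_posts (hypothetical helper, not from the study)."""
    target = Counter(w for post in target_posts for w in tokenize(post))
    baseline = Counter(w for post in baseline_posts for w in tokenize(post))
    vocab = set(target) | set(baseline)

    # Add-one style smoothing so words missing from the baseline
    # do not cause a division by zero.
    t_total = sum(target.values()) + smoothing * len(vocab)
    b_total = sum(baseline.values()) + smoothing * len(vocab)

    scores = {
        w: math.log((target[w] + smoothing) / t_total)
        - math.log((baseline[w] + smoothing) / b_total)
        for w in target
    }
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda w: (-scores[w], w))[:top_n]


# Toy, made-up posts standing in for a banned community and a baseline.
target_posts = ["bmi bmi cellulite", "bmi talk the"]
baseline_posts = ["the talk the show", "the show"]
print(distinctive_terms(target_posts, baseline_posts, top_n=2))
```

On real data, a ranked list like this would still need manual review, since words such as ‘BMI’ or ‘IQ’ are only hateful in context.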

The researchers looked at the accounts of users who were active on those subreddits and compared their posting activity from before and after those offensive subreddits were banned. The team was able to monitor upticks or drops in hate speech across Reddit and whether that speech had “migrated” to other subreddits as a result.

“The ban worked for Reddit,” the study’s abstract claims. “It succeeded on both a user and community level.”

According to the researchers, the data showed that following the ban, many more accounts discontinued their use of the site than expected: A combined 33 percent of r/fatpeoplehate users and 41 percent of r/CoonTown users became inactive or deleted their accounts. Users who stayed decreased what the study authors defined as hate speech on other subreddits by at least 80 percent, and when they migrated to other subreddits, those communities did not see significant increases in hate speech.

The study also concluded that the administrators’ swift shutdowns of duplicate hate groups after the ban helped squash the spread of hate speech and maintain control of the social network.

“For the banned community users that remained active, the ban drastically reduced the amount of hate speech they used across Reddit by a large and significant amount,” the study explains. Following the ban, Reddit saw a 90.63 percent decrease in the usage of fat-shaming terms (as defined by their data subset) by r/fatpeoplehate users, and an 81.08 percent decrease in the usage of racial slurs and racist language by r/CoonTown users (relative to their respective control groups).
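The article does not spell out how a decrease “relative to a control group” is calculated. One plausible reading, sketched below in Python, is to compare the percent change in a group's hate-term rate with the percent change seen in a matched control group over the same period. The function names and every number here are hypothetical and are not taken from the study.

```python
def rate(term_hits, total_words):
    """Share of all words that are lexicon terms."""
    return term_hits / total_words


def percent_change(before, after):
    """Percent change from before to after (negative means a decrease)."""
    return 100.0 * (after - before) / before


def change_relative_to_control(treat_before, treat_after, ctrl_before, ctrl_after):
    """Treatment group's percent change minus the control group's.
    This is an assumed, simplified comparison, not the paper's model."""
    return percent_change(treat_before, treat_after) - percent_change(ctrl_before, ctrl_after)


# Made-up counts: lexicon hits per 100,000 words, before and after a ban.
treated_before = rate(500, 100_000)
treated_after = rate(50, 100_000)
control_before = rate(100, 100_000)
control_after = rate(95, 100_000)

result = change_relative_to_control(
    treated_before, treated_after, control_before, control_after
)
print(round(result, 2))  # -85.0 for these invented numbers
```

Subtracting the control group's change is meant to separate the effect of the ban from drift that would have happened anyway, such as a sitewide shift in vocabulary.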

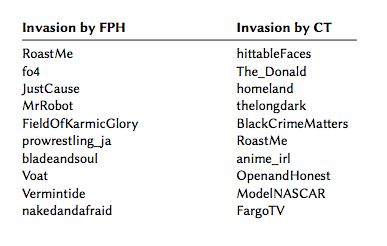

According to the researchers, instead of “spreading the infection” of hate speech to the rest of Reddit, users who remained active typically took their shitty opinions to groups where hate speech was already prevalent, like r/The_Donald and r/BlackCrimeMatters. Interestingly, a number of seemingly harmless TV fan groups like r/MrRobot, r/homeland, and r/FargoTV also saw an influx of users from those hate groups (although not an influx of hate speech).

While banning these racist subreddits is a good-faith effort on Reddit’s part, the researchers did find evidence that some users simply packed up their abhorrent views and took them to other social media sites, like Voat, Snapzu, and Empeopled. The researchers explain: “For instance, in another ongoing study, we observed that 1,536 r/fatpeoplehate users have exact match usernames on Voat.co. The users of the Voat equivalents of the two banned subreddits continue to engage in racism and fat-shaming.”

While the behavior was diminished on Reddit, the study admits that the site has made users from the banned subreddits “someone else’s problem” and that the administrators’ actions “likely did not make the internet safer or less hateful.”

Beyond tracking where the hate speech goes, it’s important to note that the researchers do not know whether users created multiple “shitposting accounts” specifically for the purposes of engaging in hate speech while keeping “normal” accounts that could reveal their identity. This makes tracking users harder. Within the dataset, it’s unclear how many total human beings we’re talking about, or whether we’re even getting the full story of the migration of hate speech.

Whitney Phillips, an academic and digital media folklorist at Mercer University, has written extensively about the impacts of hate speech and trolling. Her perspective on the study is that while moderation is important and it’s good that Reddit is taking a stance, it’s important to think about the structural and systemic issues that this particular study reveals. “This is a great first step to take but it’s not the only step,” she told me. (Phillips was not involved in this research.) “If we think it’s hard enough to figure out what to do on specific individual platforms, how are we supposed to talk about this in relation to the entire internet as a whole?”

Phillips reminds us that the internet’s problem with hate speech is both broad and impossible, and it extends beyond Reddit. “Studies like this that are limited to a specific platform can create a sense of false security because it’s not looking at the whole landscape,” she said. “This is not an argument for not responding and not pushing people out of those sites, but it is calling attention to what do you do when your reach is limited and you know that the assholes are just beyond your grasp.”