5 things we learned at Kaspersky NEXT

Credit to Author: Alberto SD | Date: Fri, 18 Oct 2019 09:16:50 +0000

This year’s Kaspersky NEXT event showcased research and discussions from some of Europe’s most prominent experts in the field of cybersecurity and artificial intelligence. From machine learning and AI right through to securing the IoT, here are five things we learned about at this year’s event, which took place in Lisbon on October 14.

Using AI to make the world more fair

Have you ever thought about how many decisions machines have made today? Or how many of those decisions were based on gender, race, or background? Chances are you don’t even realize just how many decisions are made by artificial intelligence instead of a human. Kriti Sharma, Founder of AI for Good, explained that algorithms are being used all the time to make decisions about who we are and what we want.

Sharma also argued that what we really need to do to improve artificial intelligence is bring together people of all backgrounds, genders, and ethnicities. You can watch her TED Talk on how human bias influences machine decision-making.

The impact of assigning gender to AI

Think about Alexa, Siri, and Cortana. What do they all have in common? They use female voices and are made to be obedient servants. Why is that? Is technology reinforcing stereotypes? Two panel discussions about gender and AI investigated these questions. Digital assistants have female voices because, as various studies have found, people feel more comfortable with female voices as digital assistants. Is that also a reflection of society?

So, what can be done to fight gender inequality in AI? Maybe use a gender-neutral voice, or perhaps create a new gender for robots and AI. Beyond that, and as mentioned above, it’s also important to have more diverse teams creating AI, reflecting the diversity of the users it serves.

What voice would you prefer for your digital assistant?🗣️🎙️#Siri #Alexa #Cortana #KasperskyNEXT

— Kaspersky (@kaspersky) October 14, 2019

People trust robots more than they trust people

A social robot is an artificial intelligence system that interacts with humans and other robots and that, unlike Siri or Cortana, has a physical presence. Tony Belpaeme, a professor of robotics at Ghent University, explained how issues surrounding human-robot interaction will grow as robots become more prevalent.

Belpaeme described some of the scientific experiments he and his team have run. For example, they compared how well humans and robots could extract sensitive information (date of birth, childhood street or town, favorite color, etc.) that could be used to reset passwords. The funny thing is that robots are trusted more than people are, so people are more likely to give them sensitive information.

He also showed an experiment on physical intrusion. A group of people tasked with securing a building or area readily allowed a cute robot inside. There’s no reason to assume the robot is safe, but humans assume it’s harmless, which is a highly exploitable tendency. You can read more about social robotics and related experiments on Securelist.

Can adults be influenced by robots? 🤔🤖

Experiment: Gaining access to off-limit premises using tailgating.

Second try: Robots with a pizza gave 100% success 🤣🍕@TonyBelpaeme on #KasperskyNEXT pic.twitter.com/h79Ssz0Crp— Kaspersky (@kaspersky) October 14, 2019

AI will create very convincing deepfakes

David Jacoby, a Kaspersky GReAT member, talked about social engineering: chatbots, AI, and automated threats. With time, facial and voice recognition apps could be exploited through social engineering or other old-school methods.

Jacoby went on to talk about deepfakes, which are becoming much more difficult to detect. How they may affect future elections and news broadcasts is a major concern.

The main concern, however, is trust. Once the technology is perfected, who will you trust? The solution, he pointed out, is better awareness: education as well as new technology to help users detect fake videos.

The state of security in robotics

Dmitry Galov, another Kaspersky GReAT member, talked about ROS (Robot Operating System), a flexible framework for writing robot software. Like so many other systems, ROS was not created with security in mind, and it contains significant security issues. Before ROS-based products such as social robots and autonomous cars move from university classrooms into consumers’ hands, creators must address those issues. Indeed, a new version of ROS is under heavy development. You can read more in Galov’s report.

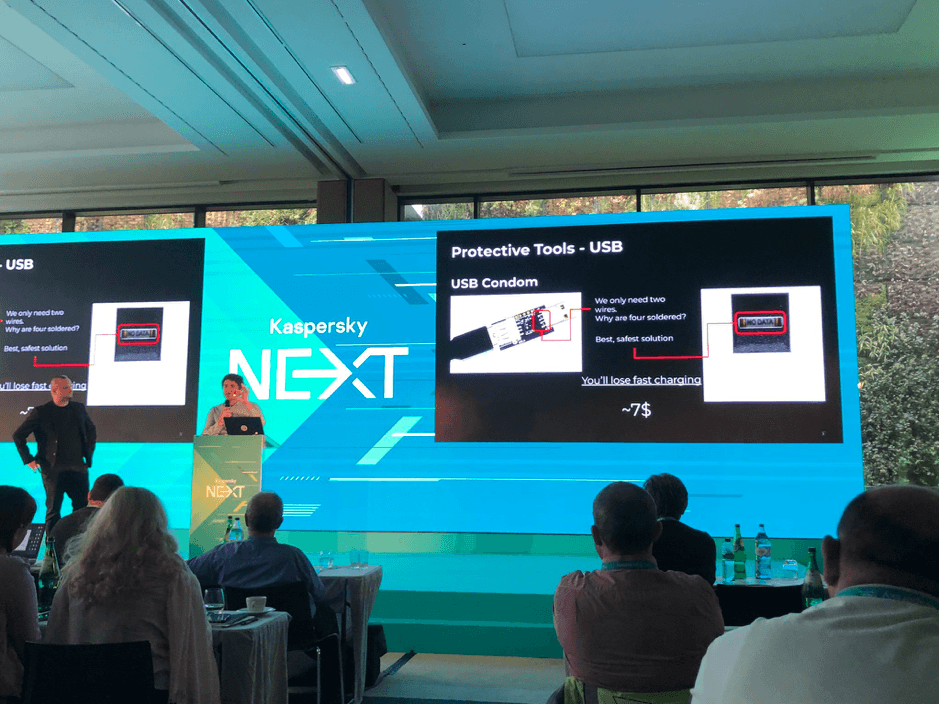

Bonus tip: Use USB condoms

Marco Preuss and Dan Demeter, members of Kaspersky’s GReAT, spoke about how people can protect themselves from hacking while away on business trips. Have you considered that there could be hidden cameras, bugs, and two-way mirrors in your hotel room?

For the slightly paranoid, this post includes some travel security tips from Preuss and Demeter. For the really paranoid, they also spoke about the importance of using a USB “condom.” Yes, they actually exist, and their usage is analogous to the normal ones: if you’re about to charge your phone using an unknown USB port, it’s worth protecting your device with this tiny gadget. Quite fortunately, unlike normal condoms, these are reusable.

To catch up on everything that went on during the day, head over to our Twitter account.